Summary (TL;DR):

The industry narrative around deepfake attacks focuses on how convincing the technology has become. That framing misses the point. Deepfakes succeed because they activate the same behavioral patterns organizations have spent years reinforcing: defer to authority, act on urgency, don’t question leadership. The real vulnerability isn’t synthetic media — it’s a culture of compliance that never taught people to pause, verify, and think critically under pressure. Until security leaders address that, no detection tool will close the gap.

Introduction

In March 2025, a finance director at a multinational firm in Singapore joined what looked like a routine video call. The CFO was on screen. Other executives appeared alongside. The request was clear: authorize a $499,000 transfer for a confidential acquisition. The finance director complied. Every face on that call was AI-generated. Every voice was synthetic. The entire meeting was fabricated using publicly available footage of the real executives. (Source: Brightside AI, October 2025)

The industry response to incidents like this tends to follow a predictable pattern. Analysts point to the sophistication of the deepfake. Vendors announce new detection features. Training providers add a module on synthetic media. And the underlying assumption goes unchallenged: that these attacks succeed because the technology is so advanced that people can’t tell real from fake.

That assumption is wrong. Or at best, incomplete.

The deeper question isn’t why people can’t spot deepfakes. It’s why they don’t even try.

The confidence gap nobody wants to talk about

The IRONSCALES Fall 2025 Threat Report surfaced a striking contradiction. When surveyed, 99% of security leaders said they were confident in their organization’s deepfake defenses. In simulated detection exercises, only 8.4% of organizations scored above 80%. The average score was 44%. (Source: IRONSCALES, Fall 2025 Threat Report)

Read that again. Near-universal confidence. Capability that averages 44%.

This isn’t a technology problem. It’s a perception problem — and it runs deeper than most organizations realize.

Over half of surveyed organizations reported financial losses tied to deepfake or AI voice fraud in the past year, with an average loss exceeding $280,000 per incident. Nearly 20% reported losses of $500,000 or more. (Source: IRONSCALES, Fall 2025 Threat Report)

Meanwhile, a 2024 meta-analysis synthesizing 56 peer-reviewed studies with over 86,000 participants found that human accuracy at identifying deepfakes is roughly 55% — statistically indistinguishable from a coin flip. The iProov 2025 Blindspot Study pushed this further: only 0.1% of participants correctly identified all deepfake samples shown to them. (Sources: Diel et al., 2024 meta-analysis; iProov Deepfake Blindspot Study, 2025)

So when the industry says “we’re confident” but the data says “we’re guessing,” the question becomes: where does that false confidence come from?

We think it comes from confusing awareness with capability. And that confusion is the most dangerous gap in security today.

You trained them to obey. Attackers noticed.

Here’s the argument most deepfake articles avoid: the same corporate culture that demands fast execution and unquestioning compliance with leadership is the culture that deepfake attacks are designed to exploit.

Consider what every successful deepfake fraud has in common. Not the quality of the synthetic media, but the behavioral pattern it activates.

Authority. A request from someone perceived as senior leadership.

Urgency. A compressed timeline that discourages deliberation.

Confidentiality. A framing that discourages the target from checking with others.

These aren’t technical exploits. They’re cultural ones. And in most organizations, these patterns aren’t bugs. They’re features. Employees are rewarded for responsiveness, penalized for delays, and socialized to avoid challenging their superiors — especially in high-pressure moments.

The Arup incident in 2024 illustrates this precisely. The employee who transferred $25 million was on a video call with what appeared to be the CFO and several colleagues, all deepfaked using publicly available conference footage. The attackers created a confidential atmosphere with a tight deadline. The employee had reportedly been suspicious of the initial request, but once familiar faces appeared on screen, those doubts gave way. They didn’t verify through a separate channel or ask a question only the real CFO would know. They did what the organization had trained them to do: follow instructions from leadership, quickly and without friction. (Sources: CNN, February 2024; Hong Kong Police briefing)

The Verizon 2025 Data Breach Investigations Report confirms the pattern at scale: approximately 60% of all confirmed breaches involved a human action — a click, a socially engineered call, or the misdelivery of sensitive data. And the report’s own researchers noted that phishing failure rates appeared unaffected by traditional training. (Source: Verizon, 2025 DBIR)

The data tells a clear story. Awareness alone doesn’t change behavior under pressure. Compliance-driven training teaches people what to know. It doesn’t teach them how to act when trust is being weaponized in real time.

Key insight: detection is a dead end. Verification is the defensible skill.

The industry’s instinct has been to respond to deepfakes with detection: tools that analyze audio artifacts, flag video inconsistencies, or score media for signs of synthetic generation. The logic seems sound — if deepfakes are convincing, build technology that sees through them.

But the field data is sobering. Research from CSIRO found that leading detection tools dropped below 50% accuracy when confronted with deepfakes produced by tools they weren’t trained on. Commercial detection platforms that demonstrate 96% accuracy in lab conditions fall to somewhere between 50% and 65% in real-world deployment. (Source: Brightside AI, October 2025, citing CSIRO research)

Detection tools will improve. But so will generative AI. This is an arms race with no stable equilibrium, and the defender is structurally disadvantaged because attackers only need to succeed once.
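The asymmetry in “attackers only need to succeed once” can be made concrete with a rough back-of-the-envelope model. The sketch below is illustrative only and rests on simplifying assumptions that are not from the sources cited here: each attempt is independent, and a detector catches any single deepfake with a fixed probability (the catch rate). The attacker wins if even one of n attempts slips through, so the chance of a breach is 1 − (catch rate)^n. The catch rates plugged in come from the lab (96%) and real-world (50% to 65%) figures above; `breach_probability` is a hypothetical helper name.

```python
def breach_probability(catch_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent deepfake attempts
    evades a detector that catches each one with probability `catch_rate`.

    Illustrative model only: assumes independent attempts and a fixed catch rate.
    """
    if not 0.0 <= catch_rate <= 1.0:
        raise ValueError("catch_rate must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # The defender has to catch every attempt; the attacker needs only one miss.
    return 1.0 - catch_rate ** attempts


if __name__ == "__main__":
    # Lab-condition accuracy versus the real-world range reported for detectors.
    for catch_rate in (0.96, 0.65, 0.50):
        for attempts in (1, 5, 20):
            p = breach_probability(catch_rate, attempts)
            print(f"catch rate {catch_rate:.0%}, {attempts:>2} attempts: "
                  f"{p:.1%} chance at least one gets through")
```

Under these assumptions, a detector with a 65% catch rate leaves roughly an 88% chance that one of five attempts succeeds, and even the 96% lab figure gives the attacker better-than-even odds across twenty attempts. Real attacks are not independent coin flips, so treat the numbers as a way to see the shape of the problem, not as a forecast.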

The more durable approach is to stop trying to teach people to spot fakes and start building the organizational reflex to verify everything that matters — regardless of how real it looks or sounds.

This is what the Ferrari incident demonstrated in July 2024. When an executive received WhatsApp messages and then a voice call from someone impersonating CEO Benedetto Vigna — complete with his southern Italian accent — the executive didn’t try to analyze whether the voice was synthetic. He asked a question only the real Vigna could answer: the title of a book he’d recommended days earlier. The impersonator hung up immediately. (Source: Bloomberg, July 2024; Fortune, July 2024)

That wasn’t a detection success. It was a verification success. The executive had been conditioned — by culture, by process, or by instinct — to treat trust as something that needs to be earned in the moment, not assumed based on appearances.

The difference between Arup and Ferrari wasn’t the quality of the deepfake. It was the behavior of the person on the receiving end. One followed the script. The other broke it.

The compliance trap: why training at 88% of organizations still isn’t working

According to the IRONSCALES 2025 report, 88% of organizations now offer some form of deepfake-related training. And yet detection rates remain dismal. (Source: IRONSCALES, Fall 2025 Threat Report)

This shouldn’t surprise anyone who has sat through a typical security awareness program. Most are built for compliance, not resilience. They measure completion rates, not behavioral change. They tell employees what deepfakes are, maybe show a few examples, and end with a quiz. Then the organization checks a box and moves on.

The Proofpoint 2024 State of the Phish Report found that 68% of employees knowingly take risky actions despite having received training. (Source: Proofpoint, 2024 State of the Phish Report) That finding alone should end the debate about whether awareness equals capability. People know the rules. They break them anyway, because the real-world pressures of urgency, authority, and social proof override what they learned in a 20-minute module three months ago.

The problem gets worse when you consider how few programs address the specific dynamics deepfakes exploit. Fewer than 20% of training programs cover voice phishing or deepfakes at all. Only 7.5% personalize training to individual risk levels — despite evidence that roughly 8% of employees drive 80% of incidents. And about 30% of employees describe their current training as boring and ineffective. (Source: Brightside AI, Security Awareness Training Statistics 2025)

This isn’t a volume problem. Organizations don’t need more training hours. They need a fundamentally different model — one that moves from telling people about risk to building their capacity to respond to it under pressure, in realistic conditions, across the channels attackers actually use.

What a defensible human layer looks like

If deepfake attacks exploit behavioral patterns — authority, urgency, trust — then the defense has to operate at the behavioral level too. Not as an afterthought bolted onto a compliance program, but as a core operational capability that security leaders can measure, track, and improve over time.

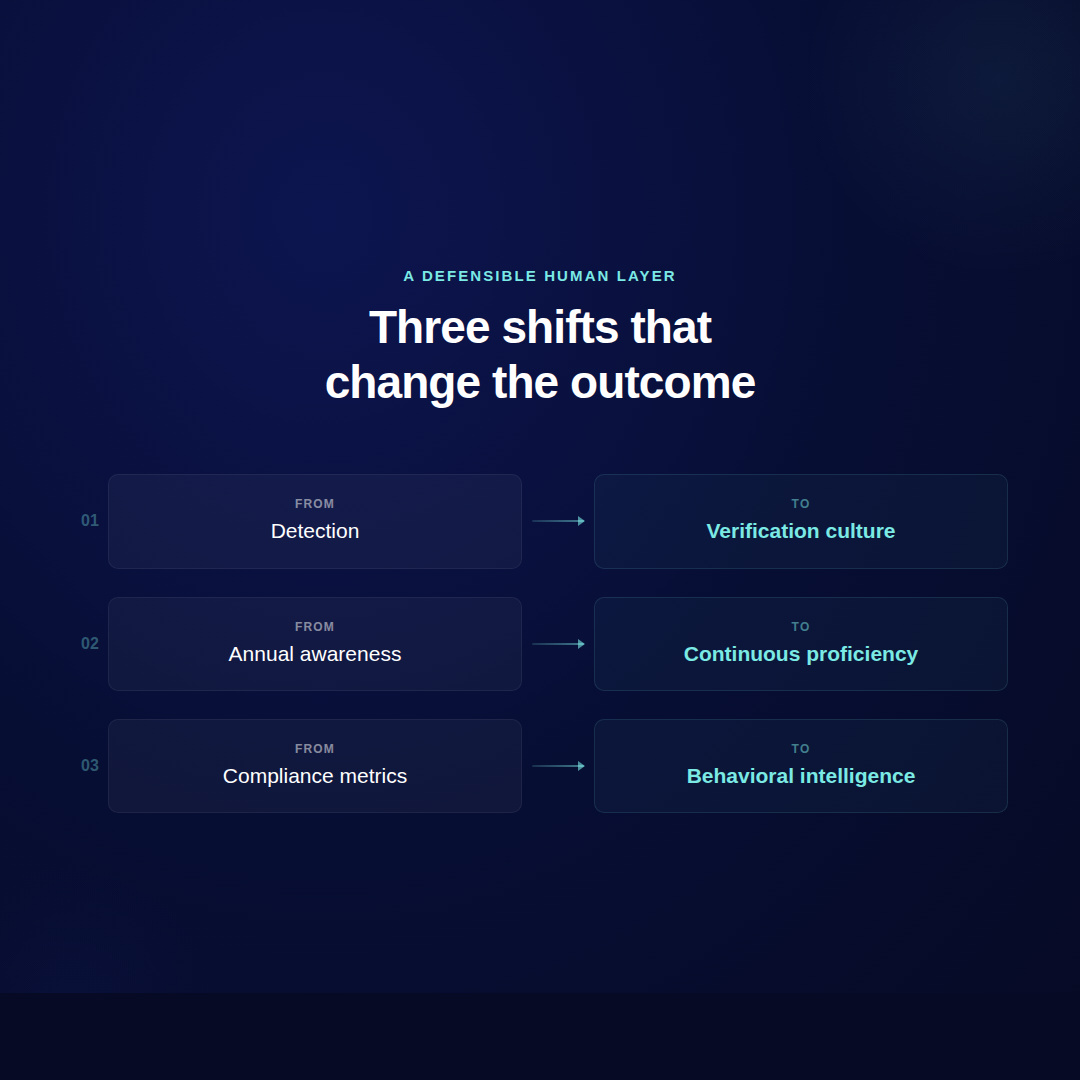

We see this requiring three shifts:

From detection to verification culture. Instead of training employees to spot synthetic artifacts (a losing proposition at scale), organizations should build verification into every sensitive workflow. Multi-person authorization for financial transactions. Out-of-band confirmation for any request involving money, access, or data — regardless of who appears to be asking. A cultural norm that says questioning a request from a senior leader isn’t insubordination. It’s proficiency.

From annual awareness to continuous behavioral proficiency. A single training event, no matter how well designed, fades within weeks. Employees who complete training in January still face threats on every remaining day of the year. What works is continuous exposure to realistic scenarios (across email, voice, and video) that adapt to each person’s role, risk profile, and behavioral patterns. This is what turns awareness into muscle memory.

From compliance metrics to behavioral intelligence. Completion rates tell you who sat through a program. They don’t tell you who would pause before authorizing a wire transfer on a Friday afternoon when someone who looks like the CFO is asking. The metrics that matter are behavioral: how quickly people report suspicious interactions, how they respond under simulated pressure, where the gaps cluster by role, department, or scenario type. This is the intelligence that lets security leaders make decisions, allocate resources, and demonstrate measurable progress to the board.
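To make the verification shift concrete, here is a minimal sketch of how a sensitive-request rule might be expressed in a workflow. It is a hypothetical illustration, not a description of any specific product or platform. It assumes a request records its action type, who it appears to come from, and the channel it arrived on. A sensitive request (money, access, or data) is approved only after confirmation through a different channel than the one it came in on, plus sign-off from at least two people other than the apparent requester. The names `Request`, `is_authorized`, `SENSITIVE_ACTIONS`, and `MIN_APPROVERS` are invented for this example, and the sketch assumes approver identities are already authenticated elsewhere.

```python
from dataclasses import dataclass, field

# Hypothetical set of actions treated as sensitive: money, access, or data.
SENSITIVE_ACTIONS = {"wire_transfer", "access_grant", "data_export"}
MIN_APPROVERS = 2  # assumed threshold for multi-person authorization


@dataclass
class Request:
    action: str
    claimed_requester: str  # who the request *appears* to come from
    inbound_channel: str    # e.g. "video_call", "email", "whatsapp"
    confirmations: dict = field(default_factory=dict)  # channel -> confirmed?
    approvers: set = field(default_factory=set)


def is_authorized(req: Request) -> tuple[bool, str]:
    """Return (allowed, reason). The requester's apparent seniority is ignored."""
    if req.action not in SENSITIVE_ACTIONS:
        return True, "not a sensitive action"
    # Out-of-band check: a confirmation on the inbound channel itself doesn't count.
    out_of_band = any(
        ok for channel, ok in req.confirmations.items()
        if channel != req.inbound_channel
    )
    if not out_of_band:
        return False, "needs confirmation through a separate channel"
    # Multi-person check: the apparent requester can't approve their own request.
    independent = req.approvers - {req.claimed_requester}
    if len(independent) < MIN_APPROVERS:
        return False, f"needs {MIN_APPROVERS} independent approvers"
    return True, "verified out of band and approved by multiple people"


if __name__ == "__main__":
    # A CFO lookalike on a video call asks for an urgent, confidential transfer.
    req = Request("wire_transfer", "cfo", "video_call")
    print(is_authorized(req))  # blocked until someone confirms out of band
    req.confirmations["phone_callback_to_directory_number"] = True
    req.approvers |= {"controller", "treasury_lead"}
    print(is_authorized(req))
```

The design choice worth noting is what the rule leaves out: nothing in it checks how senior the requester looks or how urgent the request sounds. That is the point. Verification applies regardless of who appears to be asking.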

At Zepo Intelligence, this is what we build. Our platform runs hyper-personalized, multivector simulations across the channels attackers actually use — not to scare people, but to build the kind of durable behavioral capability that holds up when the pressure is real. We measure how people perform in the face of risk, not how many slides they clicked through.

Conclusion

Deepfake-enabled fraud losses exceeded $200 million in the first quarter of 2025 alone. (Source: Wall Street Journal / The D&O Diary, August 2025) The numbers will keep growing. The technology will keep improving.

The organizations that pull ahead won’t be the ones with the best deepfake detector. They’ll be the ones where a finance director feels confident enough to say, “Sorry, I need to verify your identity” — and where that response is treated as a security asset, not a professional risk.

Human behavior isn’t the problem. It’s the key to the solution. But only if you stop training people to comply and start building their capacity to think.

If you’re rethinking how your organization prepares for AI-powered social engineering, we’d welcome the conversation.